What is Phi-2, Microsoft’s latest compact language model?

Microsoft says its latest generative AI model is more efficient than larger language models and can match or beat them on several benchmarks.

In a field dominated by large language models (LLMs) such as GPT-4 and Bard, Microsoft has introduced a compact language model called Phi-2. It has 2.7 billion parameters and is an upgraded version of Phi-1.5. Phi-2 is currently available through the Azure AI Studio model catalog, and Microsoft asserts that it can surpass larger models like Llama-2 and Mistral, as well as Google's Gemini Nano 2, in various generative AI benchmark tests.

Phi-2, initially announced by Satya Nadella at Ignite 2023 and unveiled this week, was developed by Microsoft’s research team. The generative AI model is purported to possess attributes like “common sense,” “language understanding,” and “logical reasoning.” According to Microsoft, Phi-2 can match or even outperform models up to 25 times its size on specific tasks.

Microsoft’s Phi-2 Small Language Model (SLM) was trained on “textbook-quality” data, including synthetic datasets covering general knowledge, theory of mind, daily activities, and more. Phi-2 is a transformer-based model trained with a next-word prediction objective, and training took 14 days on 96 A100 GPUs. That makes Phi-2 far cheaper and simpler to train on specific data than the resource-intensive GPT-4, which reportedly required around 90-100 days of training on tens of thousands of A100 Tensor Core GPUs.
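To put those training figures in perspective, here is a back-of-the-envelope sketch that converts them into GPU-hours. The Phi-2 numbers come from the article. The GPT-4 numbers are illustrative assumptions only (20,000 GPUs for 95 days, picked from within the reported ranges), since OpenAI has not published official figures, and the helper name `gpu_hours` is made up for this example.

```python
# Rough GPU-hour comparison; an illustrative sketch, not an official cost model.

def gpu_hours(num_gpus: int, days: int) -> int:
    """Total GPU-hours if num_gpus run continuously for the given number of days."""
    return num_gpus * days * 24

# Figures stated in the article for Phi-2.
phi2_hours = gpu_hours(num_gpus=96, days=14)

# ASSUMPTION: placeholder values inside the reported "tens of thousands of GPUs"
# and "90-100 days" ranges. These are not confirmed numbers.
ASSUMED_GPT4_GPUS = 20_000
ASSUMED_GPT4_DAYS = 95
gpt4_hours = gpu_hours(ASSUMED_GPT4_GPUS, ASSUMED_GPT4_DAYS)

print(f"Phi-2: {phi2_hours:,} A100 GPU-hours")
print(f"GPT-4 (assumed): {gpt4_hours:,} A100 GPU-hours")
print(f"Ratio: about {gpt4_hours / phi2_hours:,.0f}x")
```

Under these assumptions Phi-2's run comes to 32,256 A100 GPU-hours, roughly three orders of magnitude less than the assumed GPT-4 run. The exact ratio shifts with whatever GPT-4 figures are plugged in, so treat it as an order-of-magnitude estimate.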

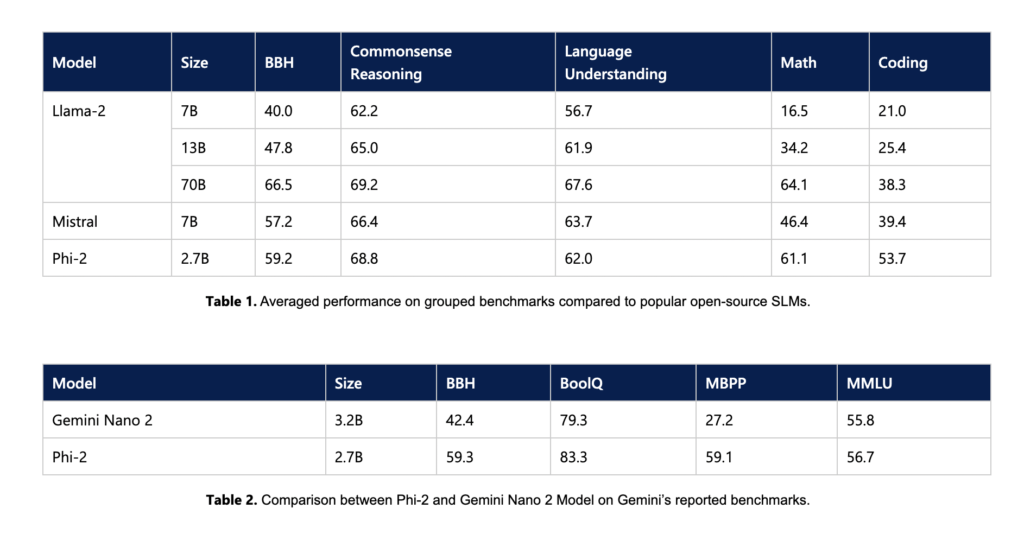

Microsoft says Phi-2 can solve intricate mathematical equations and physics problems, and can even detect errors in student calculations. According to the company's published benchmarks covering commonsense reasoning, language understanding, math, and coding, Phi-2 outperforms the 13B Llama-2 and the 7B Mistral. Microsoft also reports that it beats the 70B Llama-2 model on multi-step reasoning tasks such as math and coding, and that it matches or outperforms Google's Gemini Nano 2, a 3.25B model that can run natively on the Google Pixel 8 Pro.
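The size gaps behind these comparisons are easy to check with simple arithmetic. The sketch below uses only the parameter counts quoted in this article (in billions), and the function name `size_ratio` is a hypothetical helper introduced for illustration.

```python
# Parameter counts in billions, as quoted in the article.
PHI2_PARAMS_B = 2.7

COMPARED_MODELS_B = {
    "Llama-2 70B": 70.0,
    "Llama-2 13B": 13.0,
    "Mistral 7B": 7.0,
    "Gemini Nano 2": 3.25,
}

def size_ratio(other_params_b: float, base_params_b: float = PHI2_PARAMS_B) -> float:
    """How many times larger the other model is than the base model."""
    return other_params_b / base_params_b

for name, params_b in COMPARED_MODELS_B.items():
    print(f"{name}: {size_ratio(params_b):.1f}x the size of Phi-2")
```

The 70B Llama-2 works out to about 25.9 times the size of Phi-2, which is where the "25 times its size" figure comes from. Gemini Nano 2, by contrast, is only about 1.2 times larger.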

When a smaller model like Phi-2 can keep up with larger counterparts, the advantages are lower cost, lower power consumption, and reduced computing requirements. Such models can be tailored to specific tasks and run directly on devices, which reduces output latency. Developers can access the Phi-2 model on Azure AI Studio.